Wikipedia describes a Personal Assistant as a person who assists you with your daily business or personal tasks. A Digital Personal Assistant, on the other hand, can be described as a software agent that performs tasks or services for an individual based on commands or questions.

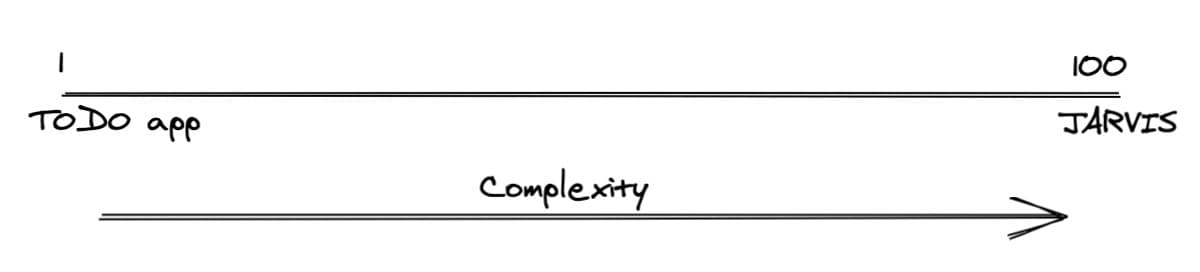

I have always wondered what a digital personal assistant could help me with. On one end of the spectrum, we can consider even a simple TODO app an assistant that helps you manage and recall the things you want to complete. On the other end, you have Iron Man’s famous AI-based personal assistant J.A.R.V.I.S. (Just A Rather Very Intelligent System). Whenever I thought of building something along these lines, the common obstacles were figuring out where to start and the technical complexities that lie ahead at each step.

Anyhow, I began working on this, and to overcome the technical complexities I treated the project as my learning playground. Whatever topic I want to learn, I learn it and apply it back here. As a result, this ever-growing project has helped me understand a lot about IoT, Networking, Machine Learning, and AI.

The idea of this post is to show what I have been able to build so far, get feedback, and help inspire others to build. What I have here is maybe 0.1% of what JARVIS can do in the movies, but it is a start nonetheless.

Establishing an Identity

To keep this project from ending up like my other failed side projects, it helped to give it an Identity. JARVIS was already too mainstream, and it’s hard to name things anyway, so I thought of calling it the next best thing - Vision.

Having an established Identity also helped me stop thinking of Vision as some program that lives in a terminal and instead give it all forms of expression: a display to show what goes on behind the scenes, a voice, the ability to see, and a bit of humor.

Assembling the Hardware

CPU and Peripherals

Vision currently runs on a Raspberry Pi 3 B+ (RPI) single-board computer. The capable CPU, coupled with wireless LAN and a Bluetooth 4.2 radio, made it an ideal candidate for this project. The RPI also has good support for connecting external peripherals to extend its capabilities.

- For audio capabilities, I connected the RPI to a speaker system and a microphone.

- For the display output, I plugged an old LCD monitor into the inbuilt HDMI socket.

- To provide visual support, RPI has an official camera module that can be attached to the inbuilt camera slot using a ribbon cable. It has an 8-megapixel sensor which is sufficient for still photographs and video recording.

Cooling System

To keep the system up and running 24x7, I had to ensure there was adequate cooling. Although the RPI is programmed to throttle its clock speed to maintain a safe temperature, I didn’t want to rely on this alone in the hot and humid weather of India, where the temperature reaches 45-48 °C in summer. I attached some aluminium heat sinks with thermal tape for extra heat dissipation. Then I mounted this assembly inside a case and attached a 5V cooling fan. This configuration kept the core temperature of the RPI at around 55 °C. In earlier attempts without this cooling setup, the temperature rose to 80 °C when the system was kept running for more than 24 hours.
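A quick way to check that the cooling is working is to read the core temperature directly. The following is a minimal sketch, not the code Vision runs: it assumes Raspberry Pi OS, where the kernel exposes the SoC temperature in millidegrees Celsius at `/sys/class/thermal/thermal_zone0/temp`. The function names and the 70 °C warning limit are illustrative assumptions.

```python
from pathlib import Path

# sysfs file that reports the SoC temperature on Raspberry Pi OS.
# The value is an integer in millidegrees Celsius, e.g. 55000 for 55 °C.
THERMAL_FILE = Path("/sys/class/thermal/thermal_zone0/temp")


def parse_millidegrees(raw: str) -> float:
    """Convert a raw sysfs reading such as '55000' to degrees Celsius."""
    return int(raw.strip()) / 1000.0


def read_cpu_temperature(path: Path = THERMAL_FILE) -> float:
    """Read the current SoC temperature in degrees Celsius."""
    return parse_millidegrees(path.read_text())


def is_too_hot(celsius: float, limit: float = 70.0) -> bool:
    """Return True when the reading is at or above the assumed warning limit."""
    return celsius >= limit
```

Calling `read_cpu_temperature()` periodically on the Pi and logging the result makes it easy to compare runs with and without the heat sinks and fan.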

External Frame

The hardware setup was more or less done, and I was left with a tangled mess of wires and cables! The next task was to assemble everything neatly and give Vision a sturdy enclosure - a body. Although I wanted to go totally overboard with this idea, I had to curb my ambitions given my poor hardware skills. Looking for ideas, I stumbled upon a project named Magic Mirror, which houses the RPI setup and an LCD screen behind a 2-way mirror.

I drew up some schematics and visited a local carpenter to help me build a wooden frame. Once the frame was ready, I installed a 2-way mirror in it and fixed the LCD behind the mirror. This way, if the LCD is off, the frame acts as a normal mirror. When the screen is powered on, the 2-way property of the mirror lets whatever is on the screen show through the mirror!

The final steps were to add some electrical switches to the frame, conceal all the hardware cables, and hang it on a wall.

Coding the Software

Once the structure was built, it was time to program it.

Interfaces

Vision currently has the following ways to enable interaction with the outside world.

- Command Center

  The LCD screen gave me plenty of real estate to show the data points that I check frequently throughout the day. I found a great open-source project named Magic Mirror, which provides an Electron app with a widget-based interface. There are amazing community-built widgets that can be used directly. I also authored a few widgets that helped solve some particular use cases of mine.

- Mobile App

  To make Vision accessible to anyone on our home network, there's an app hosted on the LAN that allows 2-way communication with it. The app relays information in the form of chat and voice commands. There are custom widgets that help play songs on Spotify and interact with all the connected smart lamps.

- Natural Language Voice Interface

  There's a mic housed inside the Command Center casing for speaking to Vision. The natural language processing is provided by Dialogflow, which uses machine learning to extract the intent behind a given command. These intents are then mapped to the various commands and actions.

- Natural Language Instant Messaging Interface

  Anything that can be spoken can be typed too. The same parsing system extracts the intent from chat messages. This comes in really handy when the microphone is out of reach.

Recently, I was able to get access to OpenAI's GPT-3 services. I integrated them into Vision for some extra quirks.
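Because the voice and chat interfaces share the same parsing step, both can feed one dispatcher that maps an intent to an action. The sketch below illustrates that idea only. It assumes the language service has already returned an intent name and a dictionary of parameters, and the intent names, handlers, and replies are all hypothetical rather than Vision's actual ones.

```python
from typing import Callable, Dict


# Hypothetical handlers; real ones would call the lamp and Spotify integrations.
def dim_lights(params: dict) -> str:
    level = params.get("brightness", 30)
    return f"Dimming lights to {level}%"


def play_music(params: dict) -> str:
    playlist = params.get("playlist", "default")
    return f"Playing playlist '{playlist}'"


# Illustrative intent names; they would match whatever the NLU agent defines.
HANDLERS: Dict[str, Callable[[dict], str]] = {
    "lights.dim": dim_lights,
    "music.play": play_music,
}


def dispatch(intent: str, params: dict) -> str:
    """Route a parsed intent to its handler, with a fallback for unknown intents."""
    handler = HANDLERS.get(intent)
    if handler is None:
        return "Sorry, I did not understand that."
    return handler(params)
```

With this shape, adding a new capability means writing one handler and registering it under an intent name, regardless of whether the command was spoken or typed.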

Controls

These are the current set of tasks Vision can perform.

- Weather and forecast

  Shows the latest weather updates and the weekly forecast. The data comes from the OpenWeather APIs.

- Newsfeed

  Displays the latest headlines, ad-free. The news is gathered by polling the RSS feeds of different news publishers.

- Realtime Air Quality Meter

  Living in the most polluted city in the world is not easy. Our condominium has installed an AQI meter that informs residents about the air quality via an app. This module polls the app's servers to fetch the real-time AQI, which helps me identify when it is safe to step out of the house and maybe go for a run.

- Realtime Traffic updates

  The deadly air quality has to come from somewhere, and a major contributor is pollution from excessive traffic. There have been many instances where I found myself stuck in a traffic jam as soon as I left for work. This widget uses the Google Maps APIs to help me find the right time to leave for some preset destinations.

- Daily meal planner

  One of the easiest ways to stick to a healthy diet is to plan your meals! My wife and I prepare a weekly meal plan, which helps us plan our diet in advance and ensures we have the ingredients ready. The data is pulled from Airtable and displayed on the Command Center.

- Calendar notifications

  This module fetches upcoming events from our family calendar and announces them 20 minutes before they start. To make the notifications interesting, I made Vision announce them in its own voice.

- Spotify Player

  Vision runs a Spotify Connect client, which makes it visible in the Spotify app. This enables everyone on the family account to play music on the attached music system.

- Mood Setter

  At 7:00 AM, Vision plays one Spotify playlist from a pre-curated list to set the morning mood. The same program runs at 8:30 PM to help us wind down after a long day.

- Lighting control

  Vision is connected to all the smart lamps in our house. It can power them on and off, adjust brightness, and apply preprogrammed moods. I can ask Vision to dim the lights and play songs in the background to set the perfect dinner ambiance. This comes in handy when the lamps are from different brands: there is no need to open 3 different apps to control them individually.

- Breathwork

  Runs a breathing exercise animation on the screen.

- Headless Google Assistant

  Why buy one when you can assemble your own! Google has published its Google Assistant SDK, which is free for non-commercial use. The Google Assistant is invoked by the "Ok Google" hotword.

- Doggo Cam

  I have not been able to put the camera to good use yet. For now, I just use it to spy on our Doggo and whatever mischief he is up to. I am working on adding a facial recognition system that will enable Vision to greet family members when they are looking at the Command Center.
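To make one of these controls concrete, the calendar announcement rule (announce an event 20 minutes before it starts, and only once) could be sketched as follows. This is an illustration rather than Vision's actual code: the `Event` shape, the function name, and the in-memory `announced` set are assumptions, and fetching events from the calendar and speaking them aloud are left out.

```python
from datetime import datetime, timedelta
from typing import Iterable, List, Set, Tuple

# Simplified, assumed event shape: (title, start time).
Event = Tuple[str, datetime]

# Announce events that start within the next 20 minutes.
LEAD_TIME = timedelta(minutes=20)


def events_to_announce(
    events: Iterable[Event], now: datetime, announced: Set[str]
) -> List[str]:
    """Return titles of events starting within the lead time, each only once."""
    due = []
    for title, start in events:
        if title in announced:
            continue  # already announced on an earlier check
        if now <= start <= now + LEAD_TIME:
            due.append(title)
            announced.add(title)  # remember it so it is not repeated
    return due
```

Running this check once a minute and passing the returned titles to a text-to-speech step would give the behavior described above. Keying the `announced` set on the title alone is a simplification; a real calendar would provide a unique event ID.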

The following schematics may help give a better idea of all the interconnected systems and services.

Was it worth building?

100% yes! The techniques I have mentioned here are not new, and there are already great apps for everything described here. But there’s no one-size-fits-all solution. Vision shows me exactly the information that interests me, without 100 other distractions. The modular architecture lets me plug in new features whenever a new need arises.

Plus, there is no comparison to the learning and satisfaction that one would get from creating something new, something tangible. The whole process allows you to plan, focus, and enjoy the empowering feeling of creating something on your own.

I will be writing a follow-up post with detailed steps if you want to build something like this. The code is not yet in good enough shape to open-source, but that’s the plan!